‘Impact assessment on a shoestring’: exploring alternative methodologies for anticipatory action evidence

Evidence on what works and what doesn’t in anticipatory humanitarian action remains limited, and practitioners across the sector are keen to learn more. New research explores alternative, leaner methodologies for impact assessments of anticipatory action interventions, with the aim of helping practitioners generate more and better evidence on what works and what needs to change.

The evidence base on anticipatory action is growing, but it remains limited overall. Most implementers of anticipatory action consider themselves to be humanitarian practitioners first and foremost, not researchers. It is little wonder, therefore, that many hesitate to invest in ways of generating evidence that may appear overly complicated or expensive. Beneficiary satisfaction surveys with small sample sizes are the norm, and there is no doubt that these can yield useful information. Often, however, they carry an inherent confirmation bias: they tend to look for ‘benefits’ rather than assess whether, and to what extent, an intervention has had any discernible effect.

Exploring the middle ground between rigour and practicality

Toward the ‘rigorous’ end of the spectrum, one of the more common methodologies used to evaluate anticipatory action has been quasi-experimental design (as in this study from Bangladesh and in this paper). This approach compares the experiences of programme participants with non-participants in a statistically rigorous way. However, this methodology tends to be labour intensive and requires strong technical skills. While more established anticipatory action teams on the ground may be able to conduct such evaluations, those with fewer resources find them challenging.

There are no shortcuts to rigorous evaluation – but there is room to explore the middle ground between rigour and practicality. This blog post summarizes an exploration of alternative methodologies for assessing the effects of anticipatory action. The objective was to help lower the threshold for practitioners to generate ‘as good as possible’ evidence, with a methodology that can be better tailored to the resources and skills available across different implementation contexts.

A ‘lean yet robust’ assessment approach

We examined 18 methodologies, which can be found in the dissertation upon which this blog post is based. The methodologies include the quasi-experimental studies mentioned above, as well as more qualitative methods like ‘Most Significant Change’. Most were mixed-method approaches, which used some combination of qualitative and quantitative work. Several had primarily been used in longer-term development projects, but they could be tailored to anticipatory action.

Each methodology was scored and ranked against requirements identified in practitioner interviews, with its defining elements assessed in light of the needs and resources that practitioners described. The ‘Success Case Method’ emerged as the most appropriate approach for anticipatory action impact assessments. While Red Cross Red Crescent National Societies served as a reference point, their criteria for prioritization (capacity, prior research experience and preferences, cost and complexity of the methodology) are relevant to most implementing organizations.
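To make the idea of scoring and ranking concrete, here is a minimal sketch of a weighted scoring matrix in Python. It is not the scoring scheme used in the dissertation, which this post does not describe in detail. The method names, the 1–5 scores and the weights are all hypothetical placeholders; only the criterion labels echo the criteria named above.

```python
# Hypothetical weights per criterion (assumed for illustration; they sum to 1).
weights = {
    "capacity": 0.30,
    "prior_experience": 0.20,
    "cost": 0.25,
    "complexity": 0.25,
}

# Hypothetical scores from 1 (poor fit) to 5 (good fit) for placeholder methods.
scores = {
    "Method A": {"capacity": 4, "prior_experience": 3, "cost": 5, "complexity": 4},
    "Method B": {"capacity": 3, "prior_experience": 5, "cost": 2, "complexity": 3},
    "Method C": {"capacity": 5, "prior_experience": 2, "cost": 4, "complexity": 2},
}


def weighted_score(method_scores, criterion_weights):
    """Sum each criterion score multiplied by its weight."""
    return sum(criterion_weights[c] * method_scores[c] for c in criterion_weights)


# Rank the methods from highest to lowest weighted score.
ranking = sorted(
    scores,
    key=lambda name: weighted_score(scores[name], weights),
    reverse=True,
)

for name in ranking:
    print(f"{name}: {weighted_score(scores[name], weights):.2f}")
```

An organization could adjust the weights to reflect its own priorities, for example by giving more weight to cost where budgets are the main constraint.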

The Success Case Method: a versatile option for impact assessment

The Success Case Method was chosen for several reasons. One of the most important factors raised in interviews with anticipatory action practitioners and other stakeholders was the need to make comparisons within the data, rather than looking only at success cases. The standard Success Case Method includes a comparison (between success cases and failures among participants), although not between beneficiaries and non-beneficiaries. A modified version can compare those two groups as well.

Additionally, capacity is an issue for many organizations implementing anticipatory action. They may not have the capacity for complex statistical analyses or sample design. The Success Case Method presents a straightforward approach, with limited sampling and easier statistical analysis – and is therefore appropriate for those with limited capacity. Other considerations were cost and complexity.

Previous studies using this methodology have noted that it was both practical and cost-effective. While several other methodologies had similar attributes, the Success Case Method met slightly more of the requirements raised by interviewees.

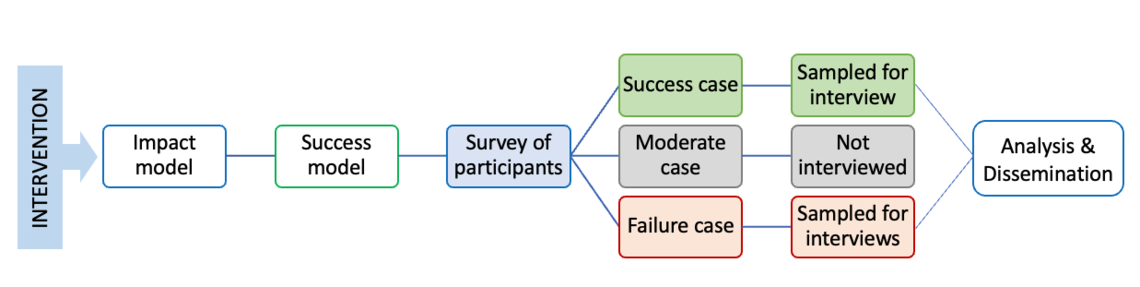

Figure 1 shows the general flow of the Success Case Method. In the ‘standard’ approach, there are four components:

- Impact model and model of success: Before assessing a programme or intervention, this methodology requires that both an impact model and model of success be developed. The impact model explains how the intervention is supposed to produce its impacts, akin to a theory of change. The model of success determines what will be classified as a success in the data. These can be developed by those conducting the assessment.

- Surveys: The first round of assessment is to survey all (or a sample of) those who received the intervention. This is designed to determine who is considered a success and whether there are any failures (in the sense of not corresponding to the model of success) among participants.

- Interviews: After the survey, a small sample of success cases and a small sample of failures are randomly selected for in-depth interviews to determine why the failures failed and why the success cases succeeded. The interviews probe possible explanations of success, with one being the anticipatory action intervention.

- Analysis and dissemination: The final step is to analyse the data, write up the conclusions and communicate them to the appropriate stakeholders.
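The survey and interview-selection steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a procedure taken from the dissertation: the field names (`household_id`, `assets_protected`), the success rule and the choice of two interviewees per group are all hypothetical.

```python
import random

# Hypothetical survey records; field names and values are illustrative only.
survey = [
    {"household_id": 1, "assets_protected": True},
    {"household_id": 2, "assets_protected": True},
    {"household_id": 3, "assets_protected": False},
    {"household_id": 4, "assets_protected": True},
    {"household_id": 5, "assets_protected": False},
    {"household_id": 6, "assets_protected": False},
]


def is_success(record):
    """Hypothetical model of success: the household protected its key assets."""
    return record["assets_protected"]


def select_interviewees(records, per_group, seed=None):
    """Split records into successes and failures, then randomly draw
    up to `per_group` households from each group for in-depth interviews."""
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    successes = [r for r in records if is_success(r)]
    failures = [r for r in records if not is_success(r)]
    return (
        rng.sample(successes, min(per_group, len(successes))),
        rng.sample(failures, min(per_group, len(failures))),
    )


success_sample, failure_sample = select_interviewees(survey, per_group=2, seed=42)
print("Success interviews:", [r["household_id"] for r in success_sample])
print("Failure interviews:", [r["household_id"] for r in failure_sample])
```

In a real assessment, the model of success would come from the impact model and would usually combine several survey indicators rather than a single yes/no field.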

Modifications for different levels of capacity and resources

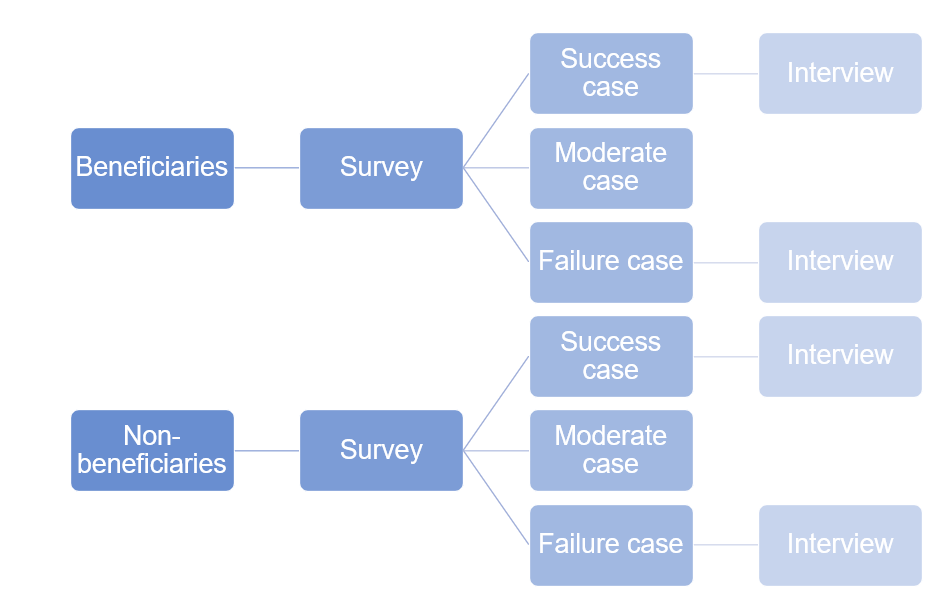

Our work discusses several modifications to the methodology design. These can be applied depending on the level of capacity available. The ‘standard’ approach shown above focuses only on the experiences of people who received anticipatory action assistance and compares ‘successes’ with ‘failures’ within this group. The first modification we propose (Figure 2) is to sample and survey both beneficiaries and non-beneficiaries, to create a comparison between those who received anticipatory action support and those who did not. This can be used by implementers who have more capacity, including sampling and statistical knowledge, as well as the funding to conduct larger studies.

This modification allows us to learn from the experiences and behaviours of disaster-affected people who didn’t receive anticipatory action assistance, but who may have fared relatively well (success cases), compared to beneficiaries who may not have been as fortunate.
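As an illustration of the kind of comparison this modification makes possible, the sketch below compares the share of success cases among beneficiaries and non-beneficiaries using a two-proportion z-test. The choice of test is our assumption rather than something prescribed by the Success Case Method, and the counts are hypothetical. The test also presumes reasonably large, randomly sampled groups; because assistance is not randomly assigned, a difference should not be read as a causal effect on its own.

```python
from math import erf, sqrt


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two proportions,
    using the pooled proportion for the standard error."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        raise ValueError("All cases fall in one category; the test is undefined.")
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    # Standard normal CDF expressed through the error function.
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return p_a - p_b, z, p_value


# Hypothetical counts: 60 of 100 beneficiaries and 45 of 100 non-beneficiaries
# were classified as success cases by the model of success.
difference, z, p_value = two_proportion_z_test(60, 100, 45, 100)
print(f"Difference in success share: {difference:.2f}")
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

The follow-up interviews remain essential here: they help explain why some non-beneficiaries succeeded and some beneficiaries did not, which the numbers alone cannot show.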

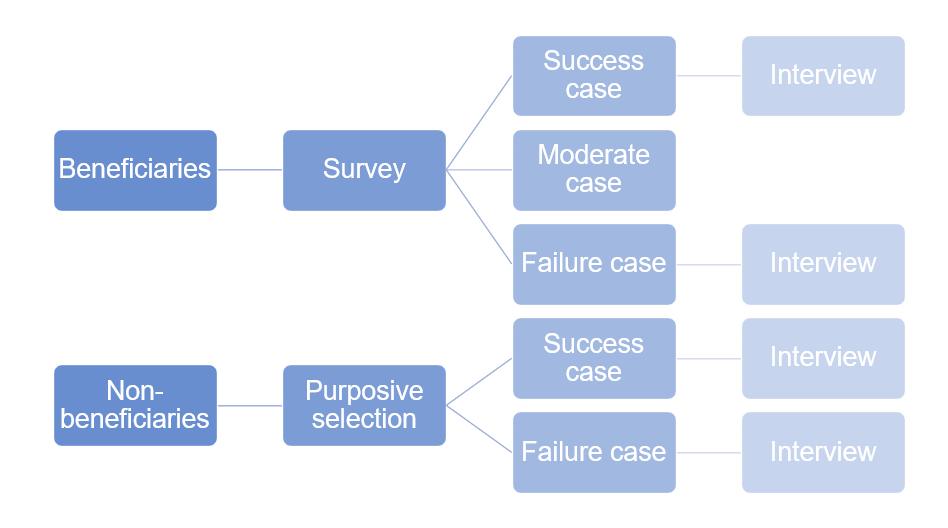

We propose a second modification (Figure 3) for implementers who do not have the capacity to collect data from a larger group of people, but who still want to learn from non-beneficiaries. In this version, non-beneficiaries are identified through purposive selection and included in the success and failure interviews. While this will not allow for statistical comparisons based on survey data, it does provide a rich, in-depth qualitative comparison between beneficiaries and non-beneficiaries.

Time to test the Success Case Method in practice

The various modifications shown here indicate that the Success Case Method is adaptable to the needs of different implementing organizations, and to different contexts. The method allows anticipatory action practitioners to build on their theories of change to define a model of success, and then use the varying combinations of quantitative and in-depth qualitative data to understand the drivers of success or failure – and whether the anticipatory action intervention has anything to do with it!

The Success Case Method has yet to be tested in the practice of anticipatory action. We have prepared a short, step-by-step brief on this approach for implementers who want to go beyond ‘beneficiary satisfaction surveys’ but who may not have the resources or capacity to go fully (quasi-) experimental. Time to give it a try. Let’s learn together!

This blog post was written by Selby Knudsen (Trilateral Research) and Clemens Gros (Red Cross Red Crescent Climate Centre). It is based on Selby’s MSc dissertation, ‘Impact Assessment on a Shoestring: Measuring the Impacts of Forecast-based Financing in Resource Limited Settings’, University College London. This dissertation provides more detailed information on how the four steps of the Success Case Method can be applied to anticipatory action impact assessments, including sampling techniques and sample size considerations, depending on the capacities of different organizations.

If you are interested in using the Success Case Method in your anticipatory action context, let Clemens know; he is happy to support you.

Featured photo by Bangladesh Red Crescent